Access the PDF version of this essay by clicking the icon to the right.

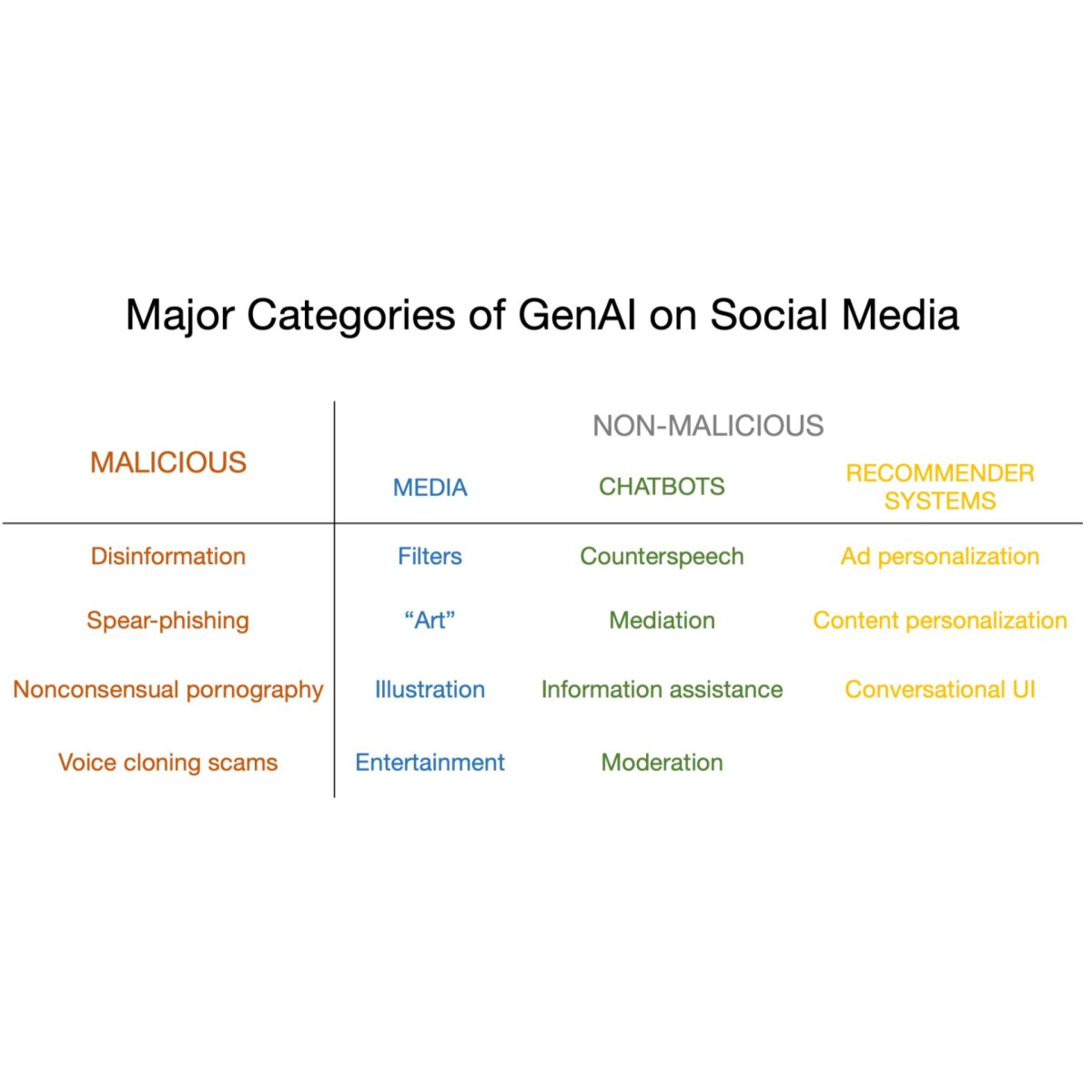

Journalists, technology ethics researchers, and civil society groups tend to focus on the harms of new technologies, and rightly so. But this results in two gaps. The first is an overemphasis on harms arising from malicious use. When it comes to generative artificial intelligence (AI) and social media, it’s at least as important to consider the harms that arise from the many types of nonmalicious yet questionable uses by companies, everyday people, and other legitimate actors. Another gap comes from the fact that public interest groups are often focused on resisting harmful deployments of AI, but at least in some cases, exploring pro-social uses might be productive.

This essay is an attempt to help close these two gaps. We begin with a framework for analyzing malicious uses by looking at attackers’ and defenders’ relative advantages. In some cases, the risk of generative AI radically improving malicious uses is overblown. In others, generative AI genuinely increases harms, and current defenses fall short. Next, we enumerate many nonmalicious uses that nonetheless pose risks and argue that a measured response is needed to evaluate their potential usefulness and harms. We describe a few ways in which chatbots can improve social media and call for research in this direction. We end with recommendations for platform companies, civil society, and other stakeholders.

I. Generative AI: New Capabilities for Bad Actors, or Just Cost Reduction?

Many people are concerned that social media will soon be awash in harmful machine-generated content. [1] Generative AI has indeed made creating and modifying synthetic media easier. The GIF below shows how easy it is to modify images in a matter of seconds.

An editing tool that allows users to modify images generated using AI. Source: The Atlantic [2]

But what, exactly, is new? Bad actors with expertise in Photoshop have long had the ability to make almost anything look real. It’s true that generative AI reduces the cost of malicious uses. But in many of the most prominently discussed domains, it hasn't led to a novel set of malicious capabilities.

How significant is the cost reduction? Let’s look at a few domains.

Disinformation

Disinformation is one of the most prominent concerns with generative AI. [3, 4] We agree that generative AI enables malicious users to create disinformation in the form of text, images, and video much more cheaply. It may also enable them to improve their cultural fluency when attempting to influence people in other countries. [7, 8]

Still, the cost of distributing misinformation by getting it into people’s social media feeds remains far higher than the cost of creating it—and generative AI does nothing to reduce this cost. In other words, the bottleneck for successful disinformation operations is not the cost of creating it. [9]

A good example is the hoax that used an AI-generated image to falsely claim that the Pentagon had been bombed. [10] Soon after the tweet was posted online, it was debunked as being AI-generated, including by Arlington County’s fire department. [11] The image was also unsophisticated: It had weird artifacts‚ including fence bars that blurred into each other. Still, it caused a brief panic, even affecting the stock market. [12]

A screenshot of the tweet that falsely reported an explosion near the Pentagon. Source: Twitter [13]

A screenshot of the tweet that falsely reported an explosion near the Pentagon. Source: Twitter [13]

But note that generative AI wasn’t a prerequisite for the hoax. A photoshopped image, or even an old image of a bombing could be enough. And malicious hoaxers intending to crash the stock market could certainly pay a digital artist for 10 minutes of their time to create a much more convincing image.

The hoax was only successful in sowing some panic because the hoaxer had a blue check on Twitter, making it appear like a legitimate news organization. Worse, it was retweeted by several verified accounts with hundreds of thousands of followers. Getting cheap and easy access to verified accounts was key to spreading the hoax.

This story isn’t about generative AI. If we pretend that it is, we lose focus on the real problem, which is Twitter wrecking a critical integrity feature of the platform.

Similarly, a DeSantis attack ad used AI generated images of Trump embracing Fauci in order to push a narrative. [14] But false narratives in political speech aren’t a novel phenomenon. We don’t doubt that many more deepfakes will be deployed during the 2024 election cycle. If those efforts are successful at reaching voters, one major enabling factor is that YouTube, Instagram, and other social media platforms have rolled back misinformation protections ahead of the election cycle. [15]

Finally, note that media accounts and popular perceptions of the effectiveness of social media disinformation at persuading voters tend to be exaggerated. For example, Russian influence operations during the 2016 U.S. elections are often cited as an example of election interference. But studies have not detected a meaningful effect of Russian social media disinformation accounts on attitudes, polarization, or voting behavior. [16]

Spear phishing

Another frequently mentioned malicious use of AI is spear phishing: personalized messages that target individuals and make it appear as if the message comes from a trusted source, in order to steal personal information or credit card details. [17]

It’s true that large language models (LLMs) make it easier to generate convincing spear-phishing messages. But analyses of spear phishing using LLMs tend to limit themselves to the challenge of getting people to open attachments or click on phishing links. [18] It’s natural to assume that those outcomes constitute a successful attack. However, that assumption hasn’t been true for the last 15 years. Modern operating systems have many protections that kick in if a user clicks on a file downloaded from the internet. [19] And browsers have deployed phishing protection starting in the mid-2000s. [20]

Besides, the email or message might not even reach the user—it is likely to be detected as phishing and blocked. [21, 22] The ability to vary the text of emails might make it harder, but only slightly (if at all). Detection methods already take into account the characteristics of the sender and other metadata. Besides, attacks need to have a payload (an attachment, a link, etc.), and defenses rely in part on detecting those.

As before, the hard part for bad actors is not generating malicious content; it’s distributing it to people. We already have effective defenses that make distribution hard, and the best way to address the risk of spear phishing is to continue to shore up those defenses.

A persuasive AI-generated spear phishing email using GPT-4, given only the target’s name and the knowledge that he is a researcher. Source: Author screenshot

We doubt that disinformation or spear phishing will be radically more effective owing to generative AI. But generative AI does enable other types of malicious uses. Let's look at two examples: nonconsensual imagery and scams enabled by voice or video cloning tools.

Nonconsensual intimate imagery (NCII)

One of the main malicious uses of generative AI has been to generate nonconsensual pornography. As of 2019, 96% of deepfakes shared online were pornographic, and over 90% of the victims were women. By July 2020, a Telegram group used to coordinate the sale of pornographic and sexualized images of women had over 100,000 deepfakes shared publicly. [23] Of course, that was before the latest crop of image generation and manipulation tools, so it’s possible that the number is far higher now.

Again, malicious users could always do this using Photoshop, but with generative AI, they don't have to spend nearly as much time or money on creating nonconsensual pornography. There are some new capabilities too. Creating deepfake videos used to be prohibitively expensive. It is now possible to create such videos on a personal laptop.

Nonconsensual deepfakes are harmful even if they don't scale: They lead to massive emotional toll. [24] On top of that, people have already been extorted using deepfakes, so they can lead to financial damage and reputational harm too. [25, 26]

There are a few defenses. Social media platforms have developed policies to remove NCII as well as manipulated images. Australia has a law that penalizes image-based abuse, and both the U.S. and the U.K. have bills with criminal penalties for deepfakes. [27-29] Online services like Stop NCII coordinate with social media platforms to remove nonconsensual images. [31]

Still, the psychological cost of being threatened with the release of one's intimate images, the slow pace of curbing their spread, and the high financial costs of going after deepfake creators mean that current defenses aren't enough to address nonconsensual deepfakes. [32-35]

Voice cloning for scams

Generative AI tools to fake peoples' voices are on the rise. Several such tools offer people the ability to clone someone's voice using only a minute-long recording of their voice. [36, 37]

Unsurprisingly, scammers are one of the early adopters. Scammers use voice cloning tools to impersonate family members or professional colleagues and ask victims to transfer money, often implying that the request is necessary and urgent.

The combination of an urgent demand and an accurate mimicry of peoples' voices can be enough to convince victims that the request is legitimate. An employee transferred 220,000 euros into a foreign bank account thinking his boss had authorized him to wire the money. [38] The elderly parents of a 39-year-old paid 21,000 Canadian dollars to scammers thinking their son was in urgent need of funds for legal fees. [39] And the parents of a 15-year-old were tricked into believing their daughter was kidnapped and were asked for a $1 million ransom (they found out their daughter was safe before making the payment). [40]

Like other technology harms, the impact of such scams is uneven across populations. For instance, seniors are already disproportionately targeted by scammers. Due to poor digital literacy, the efficacy of deepfake scams could be even higher in this population. [41]

Voice cloning tools do offer a new capability—earlier tools for cloning people's voices weren't nearly as good, neither were they as easily available as tools that use generative AI. As a result, real-time voice cloning was not a possibility. Another factor that makes such attacks effective is that like NCII (and unlike disinformation and spear phishing), scams need neither scale nor widespread distribution to be harmful.

Still, there are some potential fixes, like better detection and filtering of scam calls, so that these calls never reach their targets. And educating people to avoid such scams is likely to be the most robust (if difficult) intervention.

Making it harder to generate fakes is neither feasible nor useful

There have been many attempts at creating technical solutions to detect and watermark AI-generated content. The Coalition for Content Provenance and Authenticity has launched a verification standard for images and videos that confirms they originate from the source claiming to have created them. [42] A study on such verification standards found that while users did indeed question tampered media, in some cases, they also disbelieved honest media as a result of a lack of provenance information. [43]

At any rate, this is an opt-in standard that bad actors will simply ignore. And despite being in development in some form for over four years, it hasn't seen any meaningful adoption. So we're not optimistic about this proving to be a viable solution to detect AI-generated content.

A different approach is watermarking, which modifies generated text or media in ways that are completely imperceptible to a person but can be detected by AI using cryptographic techniques. [44] Some of the major generative AI tools, including Stable Diffusion and possibly ChatGPT, include watermarks in the output. [45, 46] Watermarks are accurate, but there are many capable open-source LLMs and image generators (including Stable Diffusion). Bad actors can simply remove the watermarking feature. And they could also use a nonwatermarked model to rephrase the output from a watermarked model—for instance, LLaMA could be used to rephrase a watermarked output of a more capable model.

Yet another approach is to use classifiers to determine if a piece of text to classify is AI-generated based on statistical patterns, even in the absence of a watermark. [47] But these classifiers are inaccurate: OpenAI's classifier fails to detect about three-quarters of AI-generated text. [48]

And while current classifiers can detect AI-generated images and videos, we feel that this isn't a sustainable approach, for two reasons. [49] First, AI-generated content is a spectrum: An image or video could be generated entirely using AI, or heavily modified, or lightly touched up. Detecting content generated entirely by AI is probably easier than detecting content that has been edited. Second, we expect the capabilities of image and video generation tools to continue getting better.

Given their severe limitations, the proliferation of AI detection tools may be harming more than helping. [50] A University of California, Davis student suffered panic attacks after a professor’s false accusation of cheating, before being cleared of the accusation. [51] A Texas A&M University–Commerce professor, Jared Mumm, threatened to fail his entire class after asking ChatGPT to determine if the students' responses were AI-generated. [52] These are not isolated examples. Twitter account @turnyouin collects horror stories of false accusations against students. [53]

A better approach

Social media platforms must develop infrastructure to combat the rise in synthetic media and bolster existing defenses. One option is to fingerprint known real, fake, and deceptive media that is shared across platforms and fact-checking organizations. [54] This could amplify the impact of third-party fact-checking: When a piece of content goes viral, it can be labeled as fake or misleading, or preemptively removed. If the disinformation actor then switches to another platform, that platform will immediately have access to the fact that the image is deceptive.

One possible downside of our approach is that it might push the costs of countering the harms enabled by generative AI companies onto third parties: third-party fact checkers, journalists, social media platforms, etc. Indeed, there is a long list of ways in which generative AI companies externalize costs. [55] This might make watermarking, AI detection, and other technical approaches seem attractive, since it represents AI companies attempting to take some responsibility for harms. But an ineffective approach is ineffective no matter how intuitively appealing.

Policy makers and civil society groups should look for other ways to force AI companies to internalize costs. For instance, in response to nonconsensual images being shared on social media, Facebook funded and provided the technical infrastructure for the nonprofit Stop NCII. [56] This allows victims to securely share their images and get them removed from various platforms. Similar efforts for AI-generated deepfakes could be funded by AI companies. Of course, such solutions are only a stopgap. There is also a need for systemic solutions such as increased taxation for AI companies that can allow publicly funded methods to address their harms.

Table 1: An overview of the malicious uses we discussed. Fuller rows imply that generative AI can be more harmful in those domains and more attention toward new defenses is needed.

The examples we’ve discussed so far aren't the only malicious uses of generative AI, and we agree that they are a cause for concern. But a narrow focus on malicious uses can distract from two other uses of generative AI on social media: harmful uses that arise due to carelessness (instead of malice) and potentially positive uses.

In the next few sections, we describe the variety of nonmalicious ways in which generative AI could be used on social media. Our aim is to explore the myriad of possibilities of generative AI. Instead of reacting passively to deployments of generative AI by big tech companies, we need to take a more proactive role in researching and deciding what applications are likely to be useful and which ones are a bad idea.

II. Four types of nonmalicious synthetic media

Synthetic media refers to any media produced or modified using digital technology. We’ve seen a few malicious examples, but there are many benign, or even positive, examples of synthetic media. For instance, the video game industry is in the business of creating synthetic media for entertainment. Video game creators are already using generative AI for things like creating rough mock-ups which are then refined by artists. [57]

A recent demo by Nvidia on conversationally interacting with in-game characters. The characters' responses and video are created using generative AI. Source: NVIDIA [58]

Filters

Filters on TikTok and Instagram are technically synthetic media. They allow users to edit their images using automated tools. Early filters were somewhat simplistic. They could edit the brightness, contrast, and color of an image. More recently, platforms have started using AI-based filters, such as those that use generative adversarial networks. [59] And Google recently introduced the ability to edit photos using AI, for instance, by removing people from backgrounds. [60]

Google’s image editing tool can move objects and even automatically fill in newly revealed background areas. Source: Google

Even simple filters have made the social comparison crisis worse by affecting users’ body image and leading to negative comparisons with peers. [61, 62] Recent filters like Bold Glamour could take this even further. [63] And now that we have the technology to make every photo and every vacation look perfect—say, by turning a cloudy day into a sunny one—how will this change what people post and what effect will that have on mental health?

This is a good example of the fact that many of the harms of generative AI on social media aren’t from malicious use and the response needs to be more nuanced than trying to ban them. AI-based filters are also a good reminder that any social media policy or regulation that relies on treating AI-generated content differently from regular content will run into a tricky definitional challenge. There is a fine spectrum of content between entirely human-created and entirely machine-generated, with no obvious place to draw the line.

“Art”

Images created using generative AI models like DALL-E, Stable Diffusion, and MidJourney have swamped social media. [64] Platforms have jumped on the trend: TikTok is experimenting with generative AI avatars, and Instagram is reportedly testing generative AI stickers. [66, 67] Whether or not AI image generation is art is debatable because it is not primarily a product of the creative efforts and expression of an artist. But this question is tangential to our point.

Most text-to-image models are trained on art without the creators' consent. [55] Artists fear losing commissions for jobs such as creating logos or company branding. Models like Adobe's Firefly, which are trained on licensed or public domain data, help address the concern about consent. [68] But they do nothing to address the labor displacement that arises because of generative AI. [69]

Adobe's Firefly model can now be used in Photoshop to edit images. Source: Adobe

Illustration

Amnesty International recently came under fire for using AI-generated images to illustrate police brutality in Colombia's 2021 protests. [70] Amnesty’s argument was that real images from protests could be used against protesters, whereas AI-generated images would help protect protestors while still illustrating the brutality. But given people’s concerns about fake news and news media's crisis of credibility, such tactics could hurt more than they help. After receiving criticism over their use of AI, Amnesty International removed these images.

The AI-generated image used by Amnesty International to illustrate police brutality. Source: The Guardian [70]

Still, there are other places AI-generated imagery could be useful, for instance, to create stock photographs. [71] One demo shows the potential of generative AI in altering the faces of people captured in street photography to maintain their privacy. [72] And advertisers are already embracing generative AI (we’ll return to this below). What's clear is that we need to urgently develop norms and policies around using generative AI in such applications, instead of a laissez-faire approach to adopting AI.

Entertainment

In addition to text-to-image models, there are also models being created to generate videos from text. From established companies like Meta, Google, and Nvidia, to startups like Runway, there are several teams working on text-to-video tools. [73–76] Such tools are currently limited. Most videos are short, and they lack the fidelity of text-to-image models, though they are quickly getting better.

Two examples of synthetic content.Left: A popular TikTok channel posts synthetic videos of vehicle crashes. Source: Author screenshot

Right: A Twitter bot colorizes black-and-white photos posted by others, in this case, a historical photo from 1916. Source: Twitter

Synthetic videos are likely to be more popular on platforms like TikTok, which are algorithmically mediated. On such platforms, it only matters what the content is, not who created it. So if generative AI can create content that people engage with when it shows up in their feeds—even if it is mindless content that people wouldn’t necessarily subscribe to—platforms are likely to recommend that more. We can already see this trend in action, and there are already synthetic videos with tens of millions of views. [78, 79]

There is a wide range in terms of creativity and potential harmfulness of AI-generated entertainment. Most of it is harmless, and at worst vapid. Some of it is thought provoking, such as a genre of videos depicting an alternate history where Western countries never achieved global power. [80] But some of it is deeply problematic, such as depictions of murder victims as entertainment. [81]

III. Pro-Social Chatbots

Pro-social chatbots are designed to promote positive social interactions. They could be integrated into social media and communication platforms. Here are four that we are thinking about. These are good avenues for research, but given the well-known limitations of language models, it isn’t currently clear if these will work well enough to be useful. Even if they do, there are hidden risks.

Counterspeech

Misinformation and hate speech remain big challenges on social media. While posts that violate platforms' content policies are taken down, there are often gray areas or borderline posts that are not removed. One way to respond to such posts is counterspeech: platforms or users could add context or challenge the claims in the original post. [82] Even if it doesn't change the speaker’s mind, it could affect the viewers of the content.

For instance, Twitter's Community Notes feature allows contributors to add context to tweets with misleading claims. [84] When enough contributors from different points of view agree with the content in a note, it is made public and attached to a tweet. Still, only a small fraction of problematic tweets seem to have notes attached, probably because of the limited number of active contributors. [85] To address this limitation, automated methods could be used to identify potential misinformation and draft corrective text—which could then be reviewed by humans and put through the consensus-based voting process. And compared to community notes, chatbots could also be used to engage in two-way dialog with users, instead of providing information passively.

An example of Twitter's Community Notes feature in action. Source: Twitter

An example of Twitter's Community Notes feature in action. Source: Twitter

One shortcoming is the tendency of LLMs to fabricate (or "hallucinate") information, though there is progress on improving factual grounding. [86, 87] Even if the corrective text is vetted by people, it could still pose risks. For instance, people tend to overrely on automated decisions (this is known as automation bias). [88] So such a system could lead to incorrect labels despite being vetted.

Finally, automated counterspeech poses risks even if it is accurate and effective. Authoritarian regimes could exploit the same techniques and tools to try to silence dissent.

Conflict mediation

Chatbots could enable more productive conversations by helping people rephrase their comments to be more respectful, translating between different languages, or reminding users of community rules or content policies. [90]

For instance, there are several known techniques to reduce divisiveness in conversations between people who disagree, including restating or validating their opinion before responding. An online experiment found that using chatbots to rephrase messages in this way in a conversation on gun control reduced political divisiveness: People felt more understood and respected when they received messages rephrased using chatbots. [91] Importantly, the rephrasing didn't change the content of the messages they received.

Again, many difficult questions must be tackled before deploying such a system in practice. Who decides which conversations are contentious and which ones aren't? How often are users nudged to rephrase their conversations? And if people use these chatbots, will their beliefs change over time? (Initial evidence suggests this is the case. [92] ) More fundamentally, we risk glossing over deep political divides with surface-level solutions.

Moderation

When a user's post on social media violates the rules, it is often removed with little or no explanation. Some platforms allow users to appeal these decisions, but appeals can take a long time to adjudicate and are costly for the platform. A chatbot could explain how the post broke the rules—even before it is posted—and help the user edit the post accordingly. A conversation would allow the user to understand content policies better.

ChatGPT provides an explanation of a moderation decision. Source: Author screenshot

To be accurate enough to deploy, platforms must fine-tune chatbots on past examples of moderated content. This is already how classifiers for removing violating content are trained.

If large language models are used for making moderation decisions and not just explaining them, there is a danger of jailbreaking. Clever prompts could be used to reinstate problematic content by “convincing” a chatbot that it doesn’t violate the platform's rules.

If chatbots are used to make content moderation decisions, users may be able to trick them. Source: Author screenshot

If chatbots are used to make content moderation decisions, users may be able to trick them. Source: Author screenshot

Information assistance

A simple use case for pro-social chatbots is to answer factual questions. If two people are debating a factual point, say the relative energy cost of electric and gas vehicles, a bot could find and summarize research on the topic.

This is similar to tools already found on platforms like Skype and Slack, both of which use OpenAI's LLMs to answer users' questions. [93, 94] While these tools are geared towards personal conversations or corporate queries ("Who should I talk to in HR for a work-related issue?"), they could just as easily be adopted to social media platforms.

Again, the usefulness of such bots is contingent on improvements in the factual grounding of responses. Note that while LLMs are notorious for frequently producing misinformation when answering questions, the task here is more straightforward: finding and summarizing relevant documents. But even this runs into two challenges. One is determining which sources on the web are authoritative, and the other is synthesizing different documents without triggering the tendency for fabricating information.

Writing assistance

Social media companies are also exploring writing assistance for users using chatbots. LinkedIn incorporated AI-based article prompts, user profiles, and job descriptions. [95, 96] Twitter rival Koo launched a feature to compose posts using ChatGPT. [97]

While such tools can be useful, especially for people writing in languages they aren't fluent in, they also allow users to easily create spam and could lead to the proliferation of low-quality content on platforms. We're already seeing examples. [98] Note that disinformation is intended to deliberately deceive, while spam is intended to make money by driving clicks.

IV. Speculative Ideas

Let’s now look at a few ways in which generative AI could be more deeply integrated into platforms. These ideas are speculative, but we think such speculation can be useful for researchers and civil society. It can allow us to anticipate risks before they materialize at a large scale and can also point to potentially beneficial ideas worth researching.

From news feeds to generated feeds

What will happen when platforms mix generative AI with personalized news feeds? We can't say for sure, but one vision of such a future is that platforms will start creating content and putting it in your feed instead of merely recommending content created by other users.

Why would platforms create content when millions of people create content for free? Because platforms can fine-tune generative models on users' past data (and regular creators don’t have access to such data). Recommender systems are already great at sifting through content uploaded by other users and picking what would be appealing to you. Using generative AI would allow platforms to generate personalized content that an individual finds addictive. [99] Today, this would be expensive to do for all social media users. But apart from cost, there are no technical limitations.

Personalized ads

Google and Meta are already planning to use generative AI in ads on their platforms. [100, 101] For now, generative AI is being pitched as a tool for advertisers to create better visuals. [102] But similar to generated feeds, we can imagine personalized ads that use generative AI going a step further. Instead of requiring advertisers to pick the media and text in an ad, platforms could create the content in the ads based on prompts by advertisers.

We are already seeing examples of such ads. Carvana recently created a massive personalized ad campaign using generative AI. [103] They released 1.3 million personalized videos for their past customers, including AI-generated voice from a voice actor, an animation of the specific car model they purchased, and other details like where the car was bought and what major world events took place around that time. [104] Though the resulting videos weren't very sophisticated, we expect that the quality of generative AI videos will continue getting better.

In another demo, a photographer recently created an AI-generated influencer based on simple illustrations. [105] (The innovation here is being able to generate many images that all look like the same character, which text-to-image tools can’t do out of the box. [106] ) Such AI-generated virtual personas could be used for personalized advertising based on user preferences.

There are many potential downsides to ads created by social media platforms. While personalized ads already rely on users' data for ad targeting, advertisers don't have access to sensitive user data. If social media companies start creating ads, it is plausible that these ads could manipulate users by relying on private information to generate personalized ad content. Another issue is discrimination. Tech companies have already faced lawsuits due to their discriminatory advertisements. [107] Given the widespread bias in generative AI tools, AI-generated ads could exacerbate discrimination. And as before, advertisements created using generative AI could suffer from fabrications, instead of being factually grounded, and could end up misleading users.

Conversational recommender system

The idea of a conversational user interface (UI) is that instead of using visual elements like icons and buttons to accomplish tasks, users could interact using text or voice input. Notably, voice-based assistants could become more useful by incorporating LLMs. [108–110] But a conversational UI makes sense for any kind of application, even when the user has access to a screen. For example, Windows Copilot is in part a conversational UI that works across many Windows applications. [111]

In the context of social media feeds, a conversational UI could allow users to tune the algorithm, for instance, by saying "Don’t show me political content for the next day" or "More from this poster" or "No graphic videos for a week." The main bottleneck here isn’t the tech. Rather, social media companies don’t seem to want to give users such control, instead preferring frictionless experiences.

V. Where Do We Go From Here?

We'll now look at a few recommendations for platform companies, civil society groups, researchers, and others. A key question that looms over this discussion is who will fund public interest work. Typically, the funding comes primarily from governments and philanthropies. But as we've discussed, this leaves out the companies responsible for creating these issues in the first place. We advocate for AI and platform companies to bear part of this cost.

Platform policies

It can be tempting to demand that social media platforms entirely disallow AI-generated content. Such a policy made sense when most synthetic media was malicious or intended to mislead people, such as deepfakes for creating misinformation or nonconsensual pornography. But as generative AI is adopted for creating other types of content, catch-all policies for removing AI-generated content will no longer suffice.

At a minimum, as our understanding of generative AI and its harms improves, it is important that platforms are transparent about the amount of synthetic and AI-generated media. Social media platforms already report details about content moderation decisions, for instance, the number of posts removed for hate speech or misinformation. [112] The prevalence of AI-generated content could be an important addition.

For now, classifiers for detecting AI-generated images can work to some extent, but this approach is likely to fail in the long run. One option would be to require users to label synthetic content as such. This would increase friction in the UI, and tech companies are famously resistant to increased friction in their products since it can drive down engagement. [114] But in our view, the potential harms of unlabeled generative AI outweigh the impact on the bottom line.

As we discussed, labeling AI-generated content could be aided by fingerprinting. For example, Twitter recently enabled Community Notes on misleading media, including AI-generated imagery. [115] Once an image or video is marked as misleading, the Community Note will automatically appear when it appears in a different tweet—even if the original tweet isn't linked. Such measures could be especially impactful if examples of known manipulated or misleading images are shared across platforms. (Fingerprints of known NCII are already shared across platforms to automatically remove such content. [116] )

It's also worth asking if content moderation policies should be any different for AI-generated content versus other content. A convincing fake video of the president is equally harmful whether it's AI-generated or an actor in a really good face mask.

Many of the potential use cases we discussed in this post involve social media platforms generating personalized content to serve users. Personalized AI-generated content could be addictive, it comes with privacy risks, and its impact on users is as yet unknown. Early attempts at optimizing LLMs for user engagement have shown them to be very effective. A recent paper shows that chatbots optimized for engagement led to 30% higher user retention. [117] Addiction is a serious risk of AI on social media. We recommend that any product features involving personalized AI-generated content should be considered high-risk: There should be a high bar for transparency, consent, and oversight before platforms even begin to experiment with these.

Civil society

This focus on malicious uses of generative AI is important. But just as important are applications that are in the gray area, for which we don't yet know enough to say whether they would be useful (for instance, pro-social chatbots). One thing we do know is that generative AI will be broadly deployed in such applications soon. Thinking ahead about the potential harms from these applications now, instead of after they're deployed, can help us evaluate them, raise the alarm about harmful ideas now, and proactively assess their usefulness instead of reacting to social media platforms.

For instance, as mentioned earlier, one study suggests that rephrasing messages in politically divisive conversations can be effective. But the scope of possible interventions is huge: Which ones are likely to be most effective? Similarly, the study we discussed was an online experiment. Real-world studies of platforms actually adopting these interventions would be much more useful. Without such studies, it is unclear if implementing such solutions can be useful at all.

Finally, social media is also undergoing a lot of changes. Some scholars have argued that new types of social media that focus on smaller, more cohesive communities can fix some of the thorniest issues with today's platforms. [118] For researchers, it's important to both think about patching the current broken paradigm and to think about new designs that alleviate these concerns in the first place.

Public education about generative AI

People gauge the authenticity of content by relying in part on its form—the authoritative tone and grammatical accuracy of a piece of text, or the realism of an image. All of a sudden, this no longer works. [119] For a while, it was possible to detect AI-generated images of people by looking at the hands, but recent versions have made this method obsolete. [120] AI-generated videos look nothing like real ones, but perhaps not for long.

Educating people about this has become a critical component of digital literacy. The pace of change in AI might seem daunting. But it’s also an opportunity. Direct interaction with generative AI is the best way to understand what is and isn’t possible using these tools. So experts should encourage people to experiment with these tools.

Similarly, AI-generated content that proliferates on social media is an opportunity for educating the public. Take the AI-generated images of the pope in a puffer jacket, which went viral in March 2023. [121] Even the pope weighed in on the need to develop AI responsibly. [122] Researchers and civil society groups should use these opportunities to level up public discourse around generative AI. And if platforms prominently label known examples of synthetic media, such images can increase awareness of how good generative AI has become.

Telling people to be skeptical is only the starting point. The harder question is how to decide what to trust. If we can’t rely on the content itself, the trustworthiness of the source becomes much more important. This is particularly challenging at a time of increased polarization and decreased trust in the media and other institutions. It is quickly becoming standard practice for the powerful to try to discount damaging information by claiming that it is AI-generated. [123] There are no easy answers.

Acknowledgements. We are grateful to Alex Abdo, Katy Glenn Bass, Ben Kaiser, Jameel Jaffer, Andrés Monroy-Hernández, and Roy Rinberg for their insightful comments that helped shape this essay.

© 2023, Sayash Kapoor & Arvind Narayanan.

Cite as: Sayash Kapoor & Arvind Narayanan, How to Prepare for the Deluge of Generative AI on Social Media, 23-04 Knight First Amend. Inst. (Jun. 16, 2023), https://knightcolumbia.org/content/how-to-prepare-for-the-deluge-of-generative-ai-on-social-media [https://perma.cc/8GBK-NL94].

References

1. J. Haidt and E. Schmidt, AI Is About to Make Social Media (Much) More Toxic. The Atlantic, 2023. https://www.theatlantic.com/technology/archive/2023/05/generative-ai-social-media-integration-dangers-disinformation-addiction/673940/

2. X. Pan, et al., Drag Your GAN: Interactive Point-based Manipulation on the Generative Image Manifold. ACM SIGGRAPH 2023 Conference Proceedings. https://vcai.mpi-inf.mpg.de/projects/DragGAN/

3. M. Wong, We Haven’t Seen the Worst of Fake News. The Atlantic, 2022. https://www.theatlantic.com/technology/archive/2022/12/deepfake-synthetic-media-technology-rise-disinformation/672519/

4. R. DiResta, The Supply of Disinformation Will Soon Be Infinite. The Atlantic, 2020. https://www.theatlantic.com/ideas/archive/2020/09/future-propaganda-will-be-computer-generated/616400/

5. N. Diakopoulos and D. Johnson, How Could Deepfakes Impact the 2020 U.S. Elections?, in Nieman Lab. https://www.niemanlab.org/2019/06/how-could-deepfakes-impact-the-2020-u-s-elections/.

6. T. Mak and D. Temple-Raston, Where Are The Deepfakes In This Presidential Election?, in NPR. 2020. https://www.npr.org/2020/10/01/918223033/where-are-the-deepfakes-in-this-presidential-election.

7. J.A. Goldstein, et al., Generative Language Models and Automated Influence Operations: Emerging Threats and Potential Mitigations. 2023, arXiv. https://arxiv.org/abs/2301.04246.

8. R. DiResta, et al., In Bed with Embeds: How a Network Tied to IRA Operations Created Fake “Man on the Street” Content Embedded in News Articles (TAKEDOWN). Stanford Internet Observatory, 2021. https://cyber.fsi.stanford.edu/io/publication/bed-embeds

9. See A. Narayanan and S. Kapoor, The LLaMA is out of the bag. Should we expect a tidal wave of disinformation?, in AI Snake Oil. 2023. https://aisnakeoil.substack.com/p/the-llama-is-out-of-the-bag-should.

10. B. Collins, Fake picture of explosion at Pentagon spooks Twitter. NBC News, 2023. https://www.nbcnews.com/tech/misinformation/fake-picture-explosion-pentagon-spooks-twitter-rcna85659

11. Arlington Fire and EMS, in Twitter. 2023. https://twitter.com/ArlingtonVaFD/status/1660653619954294786.

12. B. Edwards, Fake Pentagon “explosion” photo sows confusion on Twitter. Ars Technica, 2023. https://arstechnica.com/information-technology/2023/05/ai-generated-image-of-explosion-near-pentagon-goes-viral-sparks-brief-panic/

13. R. DiResta, in Twitter. 2023. https://twitter.com/noUpside/status/1660658703836778496.

14. J. Vincent, DeSantis attack ad uses fake AI images of Trump embracing Fauci. 2023. https://www.theverge.com/2023/6/8/23753626/deepfake-political-attack-ad-ron-desantis-donald-trump-anthony-fauci.

15. S. Fischer, Big Tech rolls back misinformation measures ahead of 2024. 2023. https://www.axios.com/2023/06/06/big-tech-misinformation-policies-2024-election.

16. G. Eady, et al., Exposure to the Russian Internet Research Agency foreign influence campaign on Twitter in the 2016 US election and its relationship to attitudes and voting behavior. Nature Communications, 2023. 14(1).. https://www.nature.com/articles/s41467-022-35576-9

17. M. Bossetta, The Weaponization of Social Media: Spear Phishing and Cyberattacks on Democracy. Columbia Journal of International Affairs, 2018. https://jia.sipa.columbia.edu/weaponization-social-media-spear-phishing-and-cyberattacks-democracy

18. J. Hazell, Large Language Models Can Be Used To Effectively Scale Spear Phishing Campaigns. 2023, arXiv. https://arxiv.org/abs/2305.06972.

19. Safely open apps on your Mac. Apple Support, 2021. https://support.apple.com/en-us/HT202491

20. C. Marcho, IE7 - Introducing the Phishing Filter - Microsoft Community Hub. 2007. https://techcommunity.microsoft.com/t5/ask-the-performance-team/ie7-introducing-the-phishing-filter/ba-p/372327

21. Gmail Email Security & Privacy Settings - Google Safety Center. https://safety.google/gmail/

22. S. Marlow, Fighting spam with Haskell, in Engineering at Meta. 2015. https://engineering.fb.com/2015/06/26/security/fighting-spam-with-haskell/.

23. H. Ajder, G. Patrini, and F. Cavalli, Automating Image Abuse: Deepfake Bots on Telegram. Sensity, 2020.

24. K. Hao, Deepfake porn is ruining women’s lives. Now the law may finally ban it. MIT Technology Review. 2021. https://www.technologyreview.com/2021/02/12/1018222/deepfake-revenge-porn-coming-ban/

25. S. Joshi, They Follow You on Instagram, Then Use Your Face To Make Deepfake Porn in This Sex Extortion Scam, in Vice. 2021. https://www.vice.com/en/article/z3x9yj/india-instagram-sextortion-phishing-deepfake-porn-scam.

26. J. Vincent, Blackmailers are using deepfaked nudes to bully and extort victims, warns FBI. 2023. https://www.theverge.com/2023/6/8/23753605/ai-deepfake-sextortion-nude-blackmail-fbi-warning.

27. M. Elias, A deepfake porn scandal has rocked the streaming community. Is Australian law on top of the issue? SBS News. 2023. https://www.sbs.com.au/news/the-feed/article/a-streamer-was-caught-looking-at-ai-generated-porn-of-female-streamers-the-story-just-scratches-the-surface/vfb2936ml

28. S. Emmanuelle, Sharing deepfake pornography could soon be illegal in America. ABC News. 2023. https://abcnews.go.com/Politics/sharing-deepfake-pornography-illegal-america/story?id=99084399

29. M.P. Ministry of Justice and The Rt Hon Dominic Raab, New laws to better protect victims from abuse of intimate images. GOV.UK, 2022. https://www.gov.uk/government/news/new-laws-to-better-protect-victims-from-abuse-of-intimate-images

30. S. Reid, The Deepfake Dilemma: Reconciling Privacy and First Amendment Protections. 2020: Rochester, NY. https://papers.ssrn.com/abstract=3636464.

31. Stop Non-Consensual Intimate Image Abuse | StopNCII.org. https://stopncii.org/

32. D. Scott, Deepfake Porn Nearly Ruined My Life. ELLE, 2020. https://www.elle.com/uk/life-and-culture/a30748079/deepfake-porn/

33. J. J. Roberts, Fake Porn Videos Are Terrorizing Women. Do We Need a Law to Stop Them? Fortune. 2019. https://fortune.com/2019/01/15/deepfakes-law/

34. E. Kocsis, Deepfakes, Shallowfakes, and the Need for a Private Right of Action Comments. PennState Law Review, 2021. 126(2): p. 621-650. https://heinonline.org/HOL/P?h=hein.journals/dknslr126&i=633

35. K. Kobriger, et al., Out of Our Depth with Deep Fakes: How the Law Fails Victims of Deep Fake Nonconsensual Pornography. Richmond Journal of Law & Technology, 2021. 28(2): p. 204-253. https://heinonline.org/HOL/P?h=hein.journals/jolt28&i=204

36. A. Kohli, AI Voice Cloning Is on the Rise. Here's What to Know. Time, 2023. https://time.com/6275794/ai-voice-cloning-scams-music/

37. ElevenLabs - Prime AI Text to Speech | Voice Cloning. https://beta.elevenlabs.io/

38. C. Stupp, Fraudsters Used AI to Mimic CEO’s Voice in Unusual Cybercrime Case. WSJ. 2019.https://www.wsj.com/articles/fraudsters-use-ai-to-mimic-ceos-voice-in-unusual-cybercrime-case-11567157402

39. P. Verma, They thought loved ones were calling for help. It was an AI scam, in Washington Post. 2023. https://www.washingtonpost.com/technology/2023/03/05/ai-voice-scam/.

40. F. Karimi, 'Mom, these bad men have me': She believes scammers cloned her daughter's voice in a fake kidnapping. CNN, 2023. https://www.cnn.com/2023/04/29/us/ai-scam-calls-kidnapping-cec/index.html

41. Oh S.S., Kim K.-A., Kim M., Oh J., Chu S.H., Choi J. Measurement of digital literacy among older adults: Systematic review. J. Med. Internet Res. 2021;23:e26145. doi: 10.2196/26145.

42. Coalition for Content Provenance and Authenticity. https://c2pa.org/

43. K.J.K. Feng, et al., Examining the Impact of Provenance-Enabled Media on Trust and Accuracy Perceptions. 2023, arXiv. https://arxiv.org/abs/2303.12118.

44. J. Kirchenbauer, et al., A Watermark for Large Language Models. 2023, arXiv. https://arxiv.org/abs/2301.10226.

45. Stable Diffusion. 2023, CompVis - Computer Vision and Learning LMU Munich. https://github.com/CompVis/stable-diffusion.

46. S. Aaronson, My AI Safety Lecture for UT Effective Altruism, in Shtetl-Optimized. 2022. https://scottaaronson.blog/?p=6823.

47. E. Mitchell, et al., DetectGPT: Zero-Shot Machine-Generated Text Detection using Probability Curvature. 2023, arXiv. https://arxiv.org/abs/2301.11305.

48. J. H. Kirchner, et al., New AI classifier for indicating AI-written text. 2023. https://openai.com/blog/new-ai-classifier-for-indicating-ai-written-text

49. For an overview of deepfake detection tools, see M. DeGeurin, 12 Companies Racing to Create AI Deepfake Detectors. Gizmodo, 2023. https://gizmodo.com/chatgpt-ai-12-companies-deepfake-video-image-detectors-1850480813

50. T. Hsu and S.L. Myers, Another Side of the A.I. Boom: Detecting What A.I. Makes, in The New York Times. 2023. https://www.nytimes.com/2023/05/18/technology/ai-chat-gpt-detection-tools.html.

51. K. Jimenez, Professors are using ChatGPT detector tools to accuse students of cheating. But what if the software is wrong? USA TODAY. 2023. https://www.usatoday.com/story/news/education/2023/04/12/how-ai-detection-tool-spawned-false-cheating-case-uc-davis/11600777002/

52. P. Verma, A professor accused his class of using ChatGPT, putting diplomas in jeopardy, in Washington Post. 2023. https://www.washingtonpost.com/technology/2023/05/18/texas-professor-threatened-fail-class-chatgpt-cheating/.

53. Turnyouin (@turnyouin) / Twitter. Twitter, 2023. https://twitter.com/turnyouin

54. PhotoDNA. https://www.microsoft.com/en-us/photodna

55. S. Kapoor and A. Narayanan, Artists can now opt out of generative AI. It’s not enough, in AI Snake Oil. 2023. https://aisnakeoil.substack.com/p/artists-can-now-opt-out-of-generative.

56. S. Mortimer, StopNCII.org has launched | Revenge Porn Helpline. 2021. https://revengepornhelpline.org.uk/news/stopncii-org-has-launched/

57. S. Totilo, Video games will be the proving ground for generative AI. Axios, 2023. https://www.axios.com/2023/02/23/video-games-generative-ai

58. NVIDIA Game Developer, NVIDIA ACE for Games Sparks Life Into Virtual Characters With Generative AI. 2023. https://www.youtube.com/watch?v=nAEQdF3JAJo.

59. TikTok Effect House: Smile. TikTok Effect House, 2023. https://effecthouse.tiktok.com/learn/guides/generative-effects/smile/

60. J. Peters, Google’s new Magic Editor uses AI to totally transform your photos. The Verge, 2023. https://www.theverge.com/2023/5/10/23716165/google-photos-ai-magic-editor-transform-pixel-io

61. D.A. de Vries, et al., Social Comparison as the Thief of Joy: Emotional Consequences of Viewing Strangers’ Instagram Posts. Media Psychology, 2018. 21(2): p. 222-245. https://doi.org/10.1080/15213269.2016.1267647

62. M. Kleemans, et al., Picture Perfect: The Direct Effect of Manipulated Instagram Photos on Body Image in Adolescent Girls. Media Psychology, 2018. 21(1): p. 93-110. https://doi.org/10.1080/15213269.2016.1257392

63. B. Allyn, Does the 'Bold Glamour' filter push unrealistic beauty standards? TikTokkers think so, in NPR. 2023. https://www.npr.org/2023/03/10/1162286785/does-the-bold-glamour-filter-push-unrealistic-beauty-standards-tiktokkers-think-.

64. Dall-e 2, in r/dalle2. 2022. www.reddit.com/r/dalle2

https://www.reddit.com/r/dalle2/comments/unhz7k/dalle_2_faq_please_start_here_before_submitting_a/.

65. B. Thompson, DALL-E, the Metaverse, and Zero Marginal Content. Stratechery by Ben Thompson, 2022. https://stratechery.com/2022/dall-e-the-metaverse-and-zero-marginal-content/

66. A. Malik, TikTok is testing an in-app tool that creates generative AI avatars, in TechCrunch. 2023. https://techcrunch.com/2023/04/26/tiktok-is-testing-an-in-app-tool-that-creates-generative-ai-avatars/.

67. A. Paluzzi, in Twitter. 2023. https://twitter.com/alex193a/status/1653857282742972416

68. B. Edwards, Ethical AI art generation? Adobe Firefly may be the answer. Ars Technica, 2023. https://arstechnica.com/information-technology/2023/03/ethical-ai-art-generation-adobe-firefly-may-be-the-answer/

69. AI Open Letter — CAIR. 2023. https://artisticinquiry.org/AI-Open-Letter

https://artisticinquiry.org/ai-open-letter

70. L. Taylor, Amnesty International criticised for using AI-generated images, in The Guardian. 2023. https://www.theguardian.com/world/2023/may/02/amnesty-international-ai-generated-images-criticism.

71. Shutterstock: Transform AI Art from Text for Free. Shutterstock. https://www.shutterstock.com/ai-image-generator

72. M. Growcoot, Street Photographer Uses AI Face Swap to Hide His Subjects' Identites. PetaPixel, 2023. https://petapixel.com/2023/05/25/street-photographer-uses-ai-face-swap-to-hide-his-subjects-identites/

73. A. Blattmann, et al., Align your Latents: High-Resolution Video Synthesis with Latent Diffusion Models. 2023, arXiv. https://arxiv.org/abs/2304.08818.

74. Text-to-video editing. Runway. https://runwayml.com/text-to-video/

75. J. Vincent, Google demos two new text-to-video AI systems, focusing on quality and length. The Verge, 2022. https://www.theverge.com/2022/10/6/23390607/ai-text-to-video-google-imagen-phenaki-new-research

76. U. Singer, et al., Make-A-Video: Text-to-Video Generation without Text-Video Data. 2022, arXiv. https://arxiv.org/abs/2209.14792.

77. Autoped. ScooterManiac - All classic scooters. https://web.archive.org/web/20110724144543/http://www.scootermaniac.org/index.php?op=modele&cle=173

78. Beauty sharer (@beautysharer168). TikTok. https://www.tiktok.com/@beautysharer168

79. delicious_yst on TikTok. TikTok. https://www.tiktok.com/@born_of_you_yst/video/7222643722410888490

80. A. Deck, TikTok creators use AI to rewrite history. Rest of World, 2023. https://restofworld.org/2023/ai-tiktok-creators-rewrite-history/

81. E. Dickson, AI Deepfakes of True-Crime Victims Are a Waking Nightmare, in Rolling Stone. 2023. https://www.rollingstone.com/culture/culture-features/true-crime-tiktok-ai-deepfake-victims-children-1234743895/.

82. D. Hangartner, et al., Empathy-based counterspeech can reduce racist hate speech in a social media field experiment. Proceedings of the National Academy of Sciences, 2021. 118(50): p. e2116310118. https://www.pnas.org/doi/10.1073/pnas.2116310118

83. P. Dangerous Speech, Counterspeech. Dangerous Speech Project, 2017. https://dangerousspeech.org/counterspeech/

84. K. Coleman, Introducing Birdwatch, a community-based approach to misinformation. 2021. https://blog.twitter.com/en_us/topics/product/2021/introducing-birdwatch-a-community-based-approach-to-misinformation

85. T. Lorenz, W. Oremus, and J.B. Merrill, How Twitter’s contentious new fact-checking project really works, in Washington Post. 2022. https://www.washingtonpost.com/technology/2022/11/09/twitter-birdwatch-factcheck-musk-misinfo/.

86. K. Weise and C. Metz, When A.I. Chatbots Hallucinate, in The New York Times. 2023. https://www.nytimes.com/2023/05/01/business/ai-chatbots-hallucination.html.

87. OpenAi, GPT-4 Technical Report. 2023, arXiv. https://arxiv.org/abs/2303.08774.

88. R. Parasuraman and D.H. Manzey, Complacency and bias in human use of automation: an attentional integration. Human Factors, 2010. 52(3): p. 381-410. http://www.ncbi.nlm.nih.gov/pubmed/21077562

89. N.F. Cypris, et al., Intervening Against Online Hate Speech: A Case for Automated Counterspeech. Institute for Ethics in Artificial Intelligence, Technical University of Munich School of Social Science and Technology. 2022.

90. B. Schneier, H. Farrell, and N.E. Sanders, How Artificial Intelligence Can Aid Democracy, in Slate. 2023. https://slate.com/technology/2023/04/ai-public-option.html.

91. L.P. Argyle, et al., AI Chat Assistants can Improve Conversations about Divisive Topics. 2023, arXiv. https://arxiv.org/abs/2302.07268.

92. M. Jakesch, et al. Co-Writing with Opinionated Language Models Affects Users’ Views. in CHI '23: CHI Conference on Human Factors in Computing Systems. 2023. ACM. https://dl.acm.org/doi/10.1145/3544548.3581196

93. F. Lardinois, Microsoft brings the new AI-powered Bing to mobile and Skype, gives it a voice, in TechCrunch. 2023. https://techcrunch.com/2023/02/22/microsoft-brings-the-new-ai-powered-bing-to-mobile-and-skype/.

94. Salesforce, Introducing the ChatGPT App for Slack. Salesforce, 2023. https://www.salesforce.com/news/stories/chatgpt-app-for-slack/

95. M. Clark, LinkedIn’s flood of ‘collaborative’ articles start out with AI prompts. The Verge, 2023. https://www.theverge.com/2023/3/4/23624241/linkedin-collaborative-articles-ai-prompts-content

96. M. Sato, LinkedIn is adding AI tools for generating profile copy and job descriptions. The Verge, 2023. https://www.theverge.com/2023/3/15/23640947/linkedin-ai-profile-job-description-tools

97. M.S. Durga, Can ChatGPT write better social media posts than you? Rest of World, 2023. https://restofworld.org/2023/can-chatgpt-write-better-social-media-posts-than-you/

98. Linus, in Twitter. 2023. https://twitter.com/LinusEkenstam/status/1662073874425544706.

99. AI/ML Media Advocacy Summit Keynote: Steven Zapata. https://www.youtube.com/watch?v=puPJUbNiEKg&t=1251s.

100. I. Mehta, Meta wants to use generative AI to create ads, in TechCrunch. 2023. https://techcrunch.com/2023/04/05/meta-wants-to-use-generative-ai-to-create-ads/.

101. H. Murphy and C. Criddle, Google to deploy generative AI to create sophisticated ad campaigns, in Financial Times. 2023. https://www.ft.com/content/36d09d32-8735-466a-97a6-868dfa34bdd5.

102. I. Mehta, Meta announces generative AI features for advertisers, in TechCrunch. 2023. https://techcrunch.com/2023/05/11/meta-announces-generative-ai-features-for-advertisers/.

103. Carvana Thanks Customers with One-of-a-Kind Videos Detailing the Day They Met Their Car. 2023. https://www.businesswire.com/news/home/20230509005451/en/Carvana-Thanks-Customers-with-One-of-a-Kind-Videos-Detailing-the-Day-They-Met-Their-Car

104. Twitter, 2023. https://twitter.com/frantzfries/status/1661359327637131264

105. M. Growcoot, Photographer Creates Lifelike Social Media Influencer Using Only AI. PetaPixel, 2023. https://petapixel.com/2023/05/23/photographer-creates-lifelike-social-media-influencer-using-only-ai/

106. L. Zhang and M. Agrawala, Adding Conditional Control to Text-to-Image Diffusion Models. 2023, arXiv. https://arxiv.org/abs/2302.05543.

107. M. DeGeurin, Meta Releases New Ad System After Housing Discrimination Suit. Gizmodo, 2023. https://gizmodo.com/facebook-meta-housing-discrimination-lawsuit-ad-system-1849967454

108. J. Kastrenakes, Humane’s new wearable AI demo is wild to watch — and we have lots of questions. The Verge, 2023. https://www.theverge.com/2023/5/9/23716996/humane-wearable-ai-tech-demo-ted-video

109. J. Peters, Amazon is working on an improved LLM to power Alexa. The Verge, 2023. https://www.theverge.com/2023/4/27/23701476/amazon-is-working-on-an-improved-llm-to-power-alexa

110. W. Ma, Apple’s AI Chief Struggles With Turf Wars as New Era Begins. The Information. 2023. https://www.theinformation.com/articles/apples-siri-chief-struggles-as-new-ai-era-begins

111. P. Panay, Bringing the power of AI to Windows 11 – unlocking a new era of productivity for customers and developers with Windows Copilot and Dev Home. Windows Developer Blog, 2023. https://blogs.windows.com/windowsdeveloper/2023/05/23/bringing-the-power-of-ai-to-windows-11-unlocking-a-new-era-of-productivity-for-customers-and-developers-with-windows-copilot-and-dev-home/

112. Facebook, Community Standards Enforcement Report. https://transparency.fb.com/data/community-standards-enforcement/

113. A. Engler, The EU and U.S. diverge on AI regulation: A transatlantic comparison and steps to alignment, in Brookings. 2023. https://www.brookings.edu/research/the-eu-and-us-diverge-on-ai-regulation-a-transatlantic-comparison-and-steps-to-alignment/.

114. M. Tomalin, Rethinking online friction in the information society. Journal of Information Technology, 2023. 38(1): p. 2-15. https://doi.org/10.1177/02683962211067812

115. N. Community, in Twitter. 2023. https://twitter.com/CommunityNotes/status/1663609484051111936.

116. Industry Partners | StopNCII.org. https://stopncii.org/partners/industry-partners/

117. R. Irvine, et al., Rewarding Chatbots for Real-World Engagement with Millions of Users. 2023, arXiv. https://arxiv.org/abs/2303.06135.

118. C. Rajendra-Nicolucci, M, Sugarman, and E. Zuckerman. The Three-Legged Stool: A Manifesto for a Smaller, Denser Internet. 2023; Available from: https://publicinfrastructure.org/2023/03/29/the-three-legged-stool/.

119. reciperobotai, This time it's slightly harder. One is Midjourney 5.1, the other is real. Which one is which?, in r/midjourney. 2023. www.reddit.com/r/midjourney/comments/13cq2wz/this_time_its_slightly_harder_one_is_midjourney/

120. P. Dixit, AI Image Generators Keep Messing Up Hands. Here's Why. BuzzFeed News, 2023. https://www.buzzfeednews.com/article/pranavdixit/ai-generated-art-hands-fingers-messed-up

121. A. Wong, Paparazzi Photos Were the Scourge of Celebrities. Now, It’s AI, in Wall Street Journal. 2023. https://www.wsj.com/articles/ai-photos-pope-francis-celebrities-dfb61f1d.

122. D. C. Lubov, Pope Francis urges ethical use of artificial intelligence - Vatican News. 2023. https://www.vaticannews.va/en/pope/news/2023-03/pope-francis-minerva-dialogues-technology-artificial-intelligenc.html

123. R. Michaelson, Turkish presidential candidate quits race after release of alleged sex tape, in The Guardian. 2023. https://www.theguardian.com/world/2023/may/11/muharrem-ince-turkish-presidential-candidate-withdraws-alleged-sex-tape.

In a 2019 article, Diakopoulos and Johnson described seven ways in which deepfakes could impact the 2020 elections. It was interesting to see that these concerns largely didn't come to pass, despite being technically feasible.[5, 6]

Nonconsensual deepfakes shouldn't be confused with regular AI-generated pornography, which does not depict real people. A subreddit devoted to posting AI-generated porn has over 65,000 members. It prohibits deepfakes.

Shannon Reid gives an overview of the First Amendment implications of regulating nonconsensual deepfakes in the U.S.[30]

It’s debatable whether voice cloning scams are dangerous even on a small scale. Although not everyone would fall prey to such scams, we think they will succeed more often than spear phishing, due to the existing phishing defenses that we mentioned. Besides, they extract a psychological toll even on nonvictims.

A year ago, after the release of DALL-E 2 by OpenAI, Ben Thompson predicted that generative AI would transform social media by drastically reducing the cost of creating content. [65]

Old Tender Man, https://www.tiktok.com/t/ZT81pgNhq/, 2023; Palette.fm, https://twitter.com/palettefm_bot/status/1655602961463099394, 2023.

To be clear, the colorization was done by AI, but the image is real. Motorized scooters existed in the 1910s

The Dangerous Speech project gives an overview of different types of counterspeech and research into its efficacy.[83]

Automated methods could include traditional machine-learning classifiers, language models used to identify variants of viral misinformation narratives, as well as fingerprinting techniques that detect known misinformation when it is posted again.

Cypris and others argue why automated counterspeech could be a helpful intervention for addressing hate speech. Their report also reviews the evidence on why and how counterspeech works.[89]

Alex Engler's analysis of generative AI regulation highlights the differences in how the EU and U.S. are regulating AI. While the EU requires transparency on the use of chatbots and around recommender systems and content moderation, the U.S. has no such regulations. [113]

Sayash Kapoor is a computer science Ph.D. candidate at Princeton.

Arvind Narayanan was the Knight Institute visiting senior research scientist for 2022-2023.